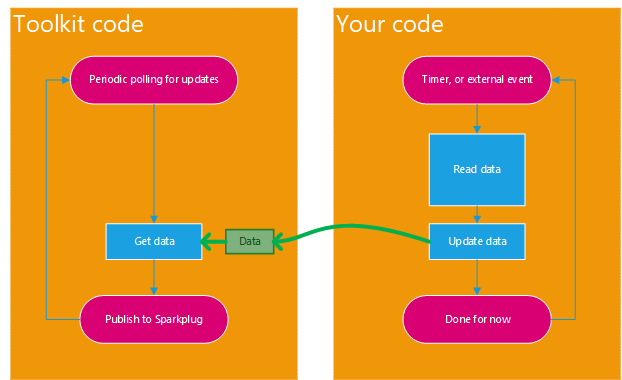

In the push data provision model, you write independently running code that gathers the data from the underlying system and stores (pushes) it into the Sparkplug metrics. Rapid Toolkit for Sparkplug then simply uses this data whenever the data needs to be published to Sparkplug (it does so by periodically checking for data that have changed).

Rapid Toolkit for Sparkplug retrieves the data (for Sparkplug publishing) from the ReadData Property of the metric object. The task of your code is to store the data into this property, using the UpdateReadData Method. When and how it is done is up to you, and depends highly on the specifics of the Sparkplug edge node you are developing, and its devices. In many cases, it can be based on some kind of periodic timer. In other scenarios, it may be triggered by the underlying system or connected device.
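The division of work described above can be modeled in a few lines. The following is only a conceptual sketch in Python, not the toolkit's API: the `Metric` and `ReadData` classes, the `update_read_data` method, and the `collect_once` helper are hypothetical stand-ins that merely mirror the names used in the text. It assumes that the stored data consists of a value and a timestamp, and that the collecting code and the publishing side may run on different threads.

```python
import threading
import time
from dataclasses import dataclass
from typing import Any, Callable, Optional


@dataclass(frozen=True)
class ReadData:
    """Hypothetical stand-in for the data held by a metric: a value and its timestamp."""
    value: Any
    timestamp: Optional[float]


class Metric:
    """Hypothetical stand-in for a metric object; not the toolkit's class."""

    def __init__(self, name: str, initial: ReadData) -> None:
        self.name = name
        self._lock = threading.Lock()
        self._read_data = initial

    @property
    def read_data(self) -> ReadData:
        # The publishing side only reads what was stored; it never touches
        # the underlying system.
        with self._lock:
            return self._read_data

    def update_read_data(self, value: Any) -> None:
        # Your code pushes freshly collected data into the metric.
        with self._lock:
            self._read_data = ReadData(value, time.time())


def collect_once(metric: Metric, read_sensor: Callable[[], Any]) -> None:
    """One collection step: read the underlying system and push the result."""
    metric.update_read_data(read_sensor())


temperature = Metric("Temperature", ReadData(0.0, None))
collect_once(temperature, lambda: 21.5)  # e.g. called from a periodic timer
print(temperature.read_data.value)       # prints 21.5
```

In a real edge node, `collect_once` would be driven by a timer or by a notification from the underlying system, as described above.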

When the push data provision model is used, it cannot be guaranteed that the data published to Sparkplug is "up to date", i.e. collected at the time it is published. This is because the ReadData Property in the metric object effectively acts as a "cache" between the underlying system and the Sparkplug payload that eventually gets published. The requests are fulfilled from this cache, and not by actually reading from the underlying system at that moment. The push data provision model can therefore only be used in applications where this behavior does not pose a problem.

Conversely, the advantage of the push data provision model is that the periodic publishing to Sparkplug can more easily be kept regular, because the code that retrieves the data from the underlying system cannot "block" the publishing loop.

The picture above illustrates how the push data provision model works.

Only the data that have changed are included in the Sparkplug payload and published. This logic is implemented internally in Rapid Toolkit for Sparkplug.
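To make the idea of publishing only the changes concrete, here is a minimal sketch in Python. It is not how Rapid Toolkit for Sparkplug implements the logic internally (that is not described here); the `ChangeFilter` class is hypothetical, and it assumes that "changed" means the value differs from the one last published.

```python
from typing import Any, Dict


class ChangeFilter:
    """Remembers the last published value per metric and reports only the differences."""

    def __init__(self) -> None:
        self._last_published: Dict[str, Any] = {}

    def changed(self, current: Dict[str, Any]) -> Dict[str, Any]:
        # Keep a metric if it was never published, or if its value differs.
        payload = {
            name: value
            for name, value in current.items()
            if name not in self._last_published
            or self._last_published[name] != value
        }
        self._last_published.update(payload)
        return payload


changes = ChangeFilter()
first = changes.changed({"Temperature": 21.5, "Pressure": 1013})
second = changes.changed({"Temperature": 21.5, "Pressure": 1009})
third = changes.changed({"Temperature": 21.5, "Pressure": 1009})
print(first)   # both metrics, nothing was published before
print(second)  # only Pressure, because Temperature is unchanged
print(third)   # empty, nothing changed since the last cycle
```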

When using the push data provision model, you are, in general, responsible for providing the initial contents of the ReadData Property. Make sure the property has reasonable contents even before your code first gets a chance to push the "real" data to the metric. Without further configuration, the ReadData Property contains empty data, meaning that there is no timestamp, and the value itself is null. This may not be what you want, especially with non-nullable data types, where null is not even a valid value for the data type of your metric; a conversion error occurs in such a case (see Rapid Toolkit for Sparkplug Producer Error Model). You always need to specify initial data that make sense for your Sparkplug edge node or device.
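The problem with empty initial data can be illustrated with a small Python sketch. It is an analogy only: the `encode_int32` and `try_publish` functions are hypothetical, and `struct.pack` merely stands in for whatever conversion the toolkit performs when it builds the Sparkplug payload. The point is that a null value has no representation in a non-nullable 32-bit integer type, whereas a seeded initial value does.

```python
import struct
from typing import Optional


def encode_int32(value: Optional[int]) -> bytes:
    """Encode a value as a 32-bit integer; None has no such encoding."""
    if value is None:
        raise ValueError("null is not a valid value for a non-nullable Int32 metric")
    return struct.pack("<i", value)


def try_publish(value: Optional[int]) -> str:
    """Report what happens when a metric with this value is published."""
    try:
        encode_int32(value)
        return "published"
    except ValueError as error:
        return f"conversion error: {error}"


print(try_publish(None))  # empty initial data leads to a conversion error
print(try_publish(0))     # an initial value seeded up front can be published
```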

Many extension methods for Sparkplug Metric Configuration already initialize the ReadData Property at least to the default value for the given data type. This ensures that the initial value is valid for that data type, but it still may not be the "right" value for your edge node or device. Some metric configuration extension methods (such as the ReadWriteValue Method) require you to specify the initial data (or just the value) directly as an argument in the method call.

You can configure how often Rapid Toolkit for Sparkplug polls for the data, using the PublishingInterval Property on the edge node and each device.

The way the polling interval is configured is the same in the pull and push data provision models. For a code example (although with the pull data provision model), see Examples - Sparkplug Edge Node - Set polling interval.

The data collection that your code performs may need to be started and stopped together with the edge node. To achieve this, you can tie it to the Starting and Stopped events of the edge node, device, or metric object.
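The general shape of such lifecycle handling is sketched below in Python. This is not the toolkit's event API: the `Collector` class is hypothetical, and its `start` and `stop` methods are what handlers of the starting and stopped notifications would call. The sketch assumes periodic collection on a background thread, with a plain list standing in for pushing data into a metric.

```python
import threading
import time
from typing import Callable, Optional


class Collector:
    """Runs a collection step periodically on a background thread until stopped."""

    def __init__(self, step: Callable[[], None], interval_seconds: float) -> None:
        self._step = step
        self._interval = interval_seconds
        self._stop = threading.Event()
        self._thread: Optional[threading.Thread] = None

    def start(self) -> None:
        # Would be called from the handler of the "starting" notification.
        self._stop.clear()
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def stop(self) -> None:
        # Would be called from the handler of the "stopped" notification.
        self._stop.set()
        if self._thread is not None:
            self._thread.join()
            self._thread = None

    def _run(self) -> None:
        # wait() returns True as soon as stop() is called, which ends the loop
        # without having to wait for the rest of the interval.
        while not self._stop.wait(self._interval):
            self._step()


pushed = []  # stands in for data pushed to a metric
collector = Collector(lambda: pushed.append(len(pushed)), interval_seconds=0.01)
collector.start()
time.sleep(0.2)  # the edge node would be running during this time
collector.stop()
count_after_stop = len(pushed)
```

Because `stop` joins the background thread, no further data is pushed once it returns.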
